How to Automate Code Review with Git Hooks

Check out the GitHub repository

https://github.com/taskautomation/ai-code-review

There seems to be money in automating code reviews. When I google "automated code review" or "AI code review", the results are overwhelming. Codebeat, SonarSource, JetBrains and others have professional products for this, but they are too heavy-duty for my needs. I am not looking to integrate a whole new tool into my workflow. I just want something simple that checks my code for obvious mistakes and poor formatting and suggests how to improve it. I also want it to be an automated part of my existing workflow, which consists of writing some code, testing it locally, committing and pushing it to GitHub, and testing a deployed version.

Using git hooks

If you look in your repo (any git repo), there is a folder called .git. In this folder there is a subfolder called hooks, which contains a set of files:

Mode LastWriteTime Length Name

---- ------------- ------ ----

-a---- 8/14/2024 7:52 PM 478 applypatch-msg.sample

-a---- 8/14/2024 7:52 PM 896 commit-msg.sample

-a---- 8/14/2024 7:52 PM 4726 fsmonitor-watchman.sample

-a---- 8/14/2024 7:52 PM 189 post-update.sample

-a---- 8/14/2024 7:52 PM 424 pre-applypatch.sample

-a---- 8/14/2024 7:52 PM 1643 pre-commit.sample

-a---- 8/14/2024 7:52 PM 416 pre-merge-commit.sample

-a---- 8/14/2024 7:52 PM 1374 pre-push.sample

-a---- 8/14/2024 7:52 PM 4898 pre-rebase.sample

-a---- 8/14/2024 7:52 PM 544 pre-receive.sample

-a---- 8/14/2024 7:52 PM 1492 prepare-commit-msg.sample

-a---- 8/14/2024 7:52 PM 2783 push-to-checkout.sample

-a---- 8/14/2024 7:52 PM 3650 update.sample

These are scripts that are triggered by different events, and at least some of the names are self-explanatory. The pre-commit hook is triggered by the commit command and runs before the actual commit. The pre-push hook is triggered by push, and so on. The .sample suffix keeps them inactive: git only runs a hook whose file name matches the hook name exactly, without any extension. There might be several good ways to do this, depending on your or your team's workflow. I will be using the post-commit hook, so that I can amend or add a new commit before pushing my code to the remote.
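
To see which hooks are actually switched on in a repo, the hooks folder can be inspected from Python. This is a minimal sketch, not part of the original setup: the function name active_hooks is made up for illustration, and it assumes the default hooks location (a core.hooksPath setting would point git somewhere else).

```python
from pathlib import Path


def active_hooks(repo_root: str) -> list[str]:
    """Return the hook file names that do not carry the .sample suffix.

    Assumption: hooks live in <repo_root>/.git/hooks (the git default).
    On Linux/macOS a hook must also be executable before git runs it;
    this sketch does not check that.
    """
    hooks_dir = Path(repo_root) / ".git" / "hooks"
    if not hooks_dir.is_dir():
        return []
    return sorted(
        entry.name
        for entry in hooks_dir.iterdir()
        if entry.is_file() and entry.suffix != ".sample"
    )
```

In a freshly initialized repo, active_hooks(".") should return an empty list, because every file shipped by git init ends in .sample.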

Activating the post-commit hook.

Let's just create a new repo to test with. The commands below create a new folder, initialize it as a git repo and commit a newly created file.

mkdir git-hook-test

cd git-hook-test

New-Item readme.md -ItemType "File"

git init

git add .

git commit -m "Initial commit"

Now, let's create the post-commit hook, add some commands to it and try to trigger it.

New-Item -Path ".\.git\hooks\post-commit" -ItemType "File"

Open the file and write something like this:

#!/bin/sh

echo "executing post-commit 333333"

exit 0

It turns out that the correct shebang (the #!/bin/sh on the first line) is important for this to work, since git uses it to decide which interpreter runs the hook.
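
One plausible way a shebang goes wrong on Windows is through the editor: a different encoding or CRLF line endings can change the first line of the file. That is an assumption on my part, not something verified in this setup. The sketch below writes the hook from Python with plain LF line endings instead; write_hook and HOOK_BODY are hypothetical names used only for illustration.

```python
import stat
from pathlib import Path

# Same content as the hook shown above.
HOOK_BODY = '#!/bin/sh\necho "executing post-commit 333333"\nexit 0\n'


def write_hook(hooks_dir: str, name: str = "post-commit", body: str = HOOK_BODY) -> Path:
    """Write a hook script with LF line endings and mark it executable."""
    if not body.startswith("#!"):
        raise ValueError("hook body must start with a shebang line")
    path = Path(hooks_dir) / name
    # newline="\n" stops Python from translating "\n" into "\r\n" on Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(body)
    # The executable bit matters on Linux/macOS; on Windows this call has
    # no practical effect for hooks.
    path.chmod(path.stat().st_mode | stat.S_IXUSR)
    return path
```

Calling write_hook(".git/hooks") from the repo root would then replace the manual New-Item and editing steps.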

Then make some changes to the readme.md and try to add and commit them. I'm just writing hello in readme.md. Then I add and commit:

git add readme.md

git commit -m "testing post-commit hook"

Behold my output:

executing post-commit 333333

[master aad9d2f] testing post-commit hook

1 file changed, 1 insertion(+)

Create virtual environment

I want the git hook to run a Python script in a specific virtual environment (as always), so it doesn't break if we update any packages or something like that. Let's go ahead and create a virtual environment called .venv in our repo, activate it and install the Python packages we need. I will be using OpenAI's GPT-4o (you need an API key for this; ChatOpenAI reads it from the OPENAI_API_KEY environment variable).

python -m venv .venv

.\.venv\Scripts\Activate.ps1

pip install langchain langchain-core langchain-community langchain-openai

Create a Python file that our hook will run, post-commit.py:

print("Hello world")

Set up the hook so that it runs the Python script within the virtual environment. Since it is a post-commit hook, we will later also pass it the output of git rev-parse for HEAD^ and HEAD. These are just the hashes of the two commits whose difference we want to analyze.

#!/bin/sh

echo "executing post-commit"

powershell.exe -Command ". .venv\\Scripts\\Activate.ps1"

python post-commit.py

exit 0

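This is my output. The (.venv) prefix in the prompt shows that the environment was already active in my terminal, which is most likely why python resolves to the virtual environment here: the PowerShell activation inside the hook runs in a separate child process and cannot change the hook's own shell. Calling .venv/Scripts/python.exe directly from the hook is a more robust alternative.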

(.venv) PS C:\Users\fredr\git-hook-test> git commit -m "updated script"

executing post-commit

Hello world!!!!!

[master d1cc364] updated script

1 file changed, 1 insertion(+), 1 deletion(-)

Let's get fancier and pass some arguments to the Python script. The arguments are the hashes of the two commits we want to compare: the previous one and the current one. From there we use subprocess to run git diff from Python and capture its output. Start by updating the Python script (post-commit.py).

import sys

import os

import subprocess

commit1 = sys.argv[1]

commit2 = sys.argv[2]

git_diff_command = f"git diff {commit1} {commit2}"

# Use the current working directory as the repository path

cwd = os.getcwd()

# Execute the git diff command and get the output

diff_output = subprocess.check_output(git_diff_command, shell=True, cwd=cwd).decode("utf-8")

# Print the output

print(diff_output)
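
A slightly more defensive variant of the script above, as a sketch rather than a replacement: it passes the command as an argument list instead of a shell string and rejects anything that does not look like a commit hash. The names build_diff_args and run_diff and the hash pattern are my own assumptions; git itself accepts many more revision spellings (HEAD^, branch names and so on).

```python
import re
import subprocess

# Full or abbreviated hexadecimal commit hashes only (an assumption of this sketch).
_HASH_RE = re.compile(r"[0-9a-fA-F]{4,64}")


def build_diff_args(commit1: str, commit2: str) -> list[str]:
    """Build the git diff command as an argument list, so no shell is involved."""
    for commit in (commit1, commit2):
        if not _HASH_RE.fullmatch(commit):
            raise ValueError(f"not a commit hash: {commit!r}")
    return ["git", "diff", commit1, commit2]


def run_diff(commit1: str, commit2: str, repo_path: str = ".") -> str:
    """Run git diff inside repo_path and return its output as text."""
    completed = subprocess.run(
        build_diff_args(commit1, commit2),
        cwd=repo_path,
        capture_output=True,
        text=True,
        encoding="utf-8",
        errors="replace",  # a diff can contain bytes that are not valid UTF-8
        check=True,  # raises CalledProcessError if git exits with an error
    )
    return completed.stdout
```

With this check in place, accidentally passing a literal word such as commit1 instead of a hash fails immediately with a ValueError instead of a less obvious git error.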

Also change the post-commit hook to pass the two commits as arguments.

#!/bin/sh

echo "executing post-commit"

commit1=$(git rev-parse HEAD^)

commit2=$(git rev-parse HEAD)

powershell.exe -Command ". .venv\\Scripts\\Activate.ps1"

python post-commit.py "$commit1" "$commit2"

exit 0

My output:

(.venv) PS C:\Users\fredr\git-hook-test> git add .\post-commit.py

(.venv) PS C:\Users\fredr\git-hook-test> git commit -m "update"

executing post-commit

diff --git a/post-commit.py b/post-commit.py

index b6cf2ec..f5d58d3 100644

--- a/post-commit.py

+++ b/post-commit.py

@@ -13,4 +13,5 @@ cwd = os.getcwd()

# Execute the git diff command and get the output

diff_output = subprocess.check_output(git_diff_command, shell=True, cwd=cwd).decode("utf-8")

+# Print the output

print(diff_output)

\ No newline at end of file

[master b5eba10] update

1 file changed, 1 insertion(+)

So the output is pretty meta: it is the git diff of the very file that prints the diff. Luckily, LLMs read this format well and can help us out.

To understand the output better you can look at your commit history and play around with the diffs.

(.venv) PS C:\Users\fredr\git-hook-test> git reflog

b5eba10 (HEAD -> master) HEAD@{0}: commit: update

3169596 HEAD@{1}: commit: added some more code

d1cc364 HEAD@{2}: commit: updated script

19497f7 HEAD@{3}: commit: updated script

c2fe0c4 HEAD@{4}: commit: updated script

db6d4ef HEAD@{5}: commit: updated script

42297f4 HEAD@{6}: commit: updated script

bf8763a HEAD@{7}: commit: added post commit

76a04fe HEAD@{8}: commit: Added ignore

dcc152f HEAD@{9}: commit: testing testing

81fc52f HEAD@{10}: commit: new test ffs

ea50e68 HEAD@{11}: commit: new test

a8140bb HEAD@{12}: commit: testing post-commit hook

fa0a5cb HEAD@{13}: commit (initial): Initial commit

(.venv) PS C:\Users\fredr\git-hook-test> git diff 3169596 b5eba10

diff --git a/post-commit.py b/post-commit.py

index b6cf2ec..f5d58d3 100644

--- a/post-commit.py

+++ b/post-commit.py

@@ -13,4 +13,5 @@ cwd = os.getcwd()

# Execute the git diff command and get the output

diff_output = subprocess.check_output(git_diff_command, shell=True, cwd=cwd).decode("utf-8")

+# Print the output

print(diff_output)

\ No newline at end of file
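
The reflog above also shows one edge case: the initial commit has no parent, so git rev-parse HEAD^ fails there. One way to handle that, sketched here under my own assumptions, is to fall back to git's empty tree object so that the first commit is diffed against "nothing". The names commit_range and EMPTY_TREE and the injectable rev_parse parameter are hypothetical; the hash is the empty tree of SHA-1 repositories (SHA-256 repositories use a different one).

```python
import subprocess
from typing import Callable, Optional, Tuple

# Hash of git's empty tree object in SHA-1 repositories.
EMPTY_TREE = "4b825dc642cb6eb9a060e54bf8d69288fbee4904"


def git_rev_parse(rev: str) -> Optional[str]:
    """Return the hash that rev resolves to, or None if git cannot resolve it."""
    result = subprocess.run(
        ["git", "rev-parse", "--verify", "--quiet", rev],
        capture_output=True,
        text=True,
    )
    return result.stdout.strip() if result.returncode == 0 else None


def commit_range(
    rev_parse: Callable[[str], Optional[str]] = git_rev_parse,
) -> Tuple[str, str]:
    """Return (base, head) for the diff, using the empty tree if HEAD has no parent."""
    head = rev_parse("HEAD")
    if head is None:
        raise RuntimeError("repository has no commits yet")
    parent = rev_parse("HEAD^")
    return (parent or EMPTY_TREE, head)
```

The rev_parse parameter exists only so the logic can be tried without a real repository, for example commit_range({"HEAD": "fa0a5cb"}.get).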

Involve LLM - Passing the diff

To pass the git diff output to the model together with some instructions, we use a prompt template. We start with some basic instructions and leave a {diff} placeholder where the git diff output is inserted. The prompt is then passed through a chain consisting of the prompt, the LLM and an output parser.

import sys

import os

import subprocess

from langchain.prompts import PromptTemplate

from langchain_openai import ChatOpenAI

from langchain_core.output_parsers import StrOutputParser

commit1 = sys.argv[1]

commit2 = sys.argv[2]

git_diff_command = f"git diff {commit1} {commit2}"

# Use the current working directory as the repository path

cwd = os.getcwd()

# Execute the git diff command and get the output

diff_output = subprocess.check_output(git_diff_command, shell=True, cwd=cwd).decode("utf-8")

prompt = """

You are an expert developer and git super user. You do code reviews based on the git diff output between two commits. Complete the following tasks, and be extremely critical and precise in your review:

* [Description] Describe the code change.

* [Obvious errors] Look for obvious errors in the code.

* [Improvements] Suggest improvements where relevant.

* [Friendly advice] Give some friendly advice or heads up where relevant.

* [Stop when done] Stop when you are done with the review.

This is the git diff output between two commits: \n\n {diff}

AI OUTPUT:

"""

llm = ChatOpenAI(

model="gpt-4o",

temperature=0,

max_tokens=None,

timeout=None,

max_retries=2,

)

output_parser = StrOutputParser()

PROMPT = PromptTemplate(template=prompt, input_variables=["diff"])

chain = PROMPT | llm | output_parser

print("Running diffs...")

results = chain.invoke({"diff": diff_output})

print(results)
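
The {diff} placeholder in the template follows the same rules as a Python str.format field, which as far as I understand is what PromptTemplate's default template format is based on. The sketch below uses plain str.format as a stand-in (not LangChain itself) to show that braces inside the diff are harmless, because the diff is inserted as a value and never parsed as a template. TEMPLATE and fill_prompt are illustrative names.

```python
# Shortened stand-in for the prompt used in the script above.
TEMPLATE = "This is the git diff output between two commits:\n\n{diff}\n\nAI OUTPUT:\n"


def fill_prompt(template: str, diff: str) -> str:
    """Substitute the diff text into the {diff} field of the template."""
    return template.format(diff=diff)


# A diff line that itself contains curly braces.
example = fill_prompt(TEMPLATE, "+settings = {'temperature': 0}")
```

Literal braces in the instruction part of the template are a different matter: with str.format they would have to be doubled ({{ and }}) to survive.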

This is what the LLM thinks about my code:

(.venv) PS C:\Users\fredr\git-hook-test> git commit -m "Added llm instructions"

executing post-commit

Running diffs...

### [Description]

The code change introduces several new imports and a significant extension to the functionality of the script. The new imports are from the `langchain` and `langchain_openai` libraries. The script now constructs a prompt for an AI model to perform a code review based on the git diff output between two commits. It sets up a language model (ChatOpenAI) and an output parser (StrOutputParser), and then uses these to process the diff output and generate a review. The final results are printed to the console.

### [Obvious errors]

1. **Import Error**: The import statement `from langchain_openai import ChatOpenAI` should be `from langchain.openai import ChatOpenAI` based on the typical module structure of `langchain`.

2. **Model Name**: The model name `"gpt-4o"` is not a standard model name. It should be verified if this is a typo or if the correct model name should be used.

3. **No newline at end of file**: The file does not end with a newline, which is generally considered a good practice in many coding standards.

### [Improvements]

1. **Error Handling**: The script lacks error handling for the subprocess call and the AI model invocation. Adding try-except blocks would make the script more robust.

2. **Configuration**: The parameters for `ChatOpenAI` (like `temperature`, `max_tokens`, etc.) should ideally be configurable via command-line arguments or a configuration file.

3. **Logging**: Instead of using print statements, consider using the `logging` module for better control over logging levels and outputs.

4. **Code Comments**: Adding comments to explain the purpose of each major block of code would improve readability and maintainability.

### [Friendly advice]

1. **Dependency Management**: Ensure that the `langchain` and `openai` libraries are properly documented in your `requirements.txt` or equivalent dependency management file.

2. **Testing**: Before deploying this script, thoroughly test it with different diff outputs to ensure it handles various edge cases gracefully.

3. **Documentation**: Update the script's documentation to reflect the new functionality and dependencies.

### [Stop when done]

Review complete.

[master 3443eae] Added llm instructions

1 file changed, 34 insertions(+), 2 deletions(-)

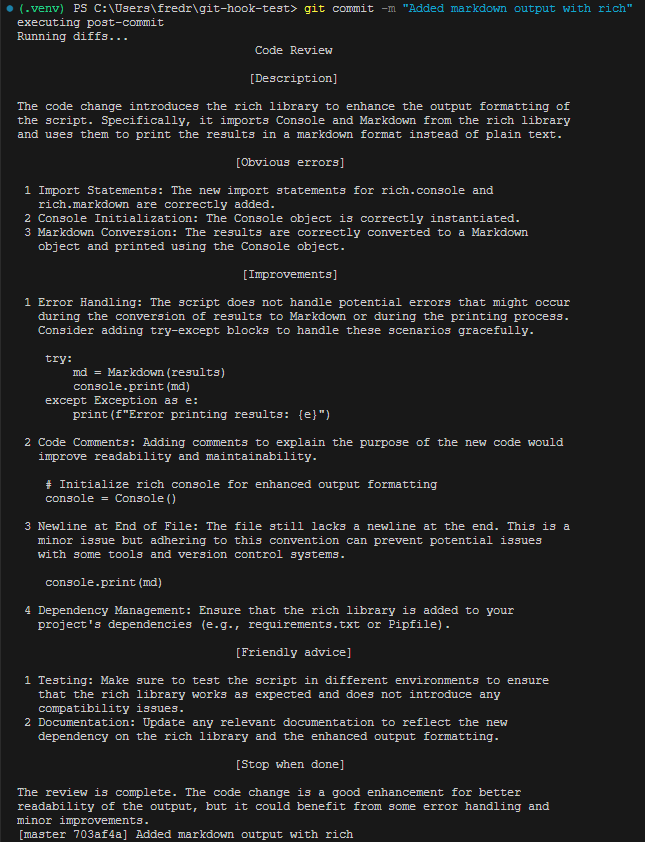

Formatting the output

To make the LLM output easier to read, we can take advantage of the fact that the LLM produces Markdown. Let's use the rich Python package to render the Markdown nicely in the console. First install rich in your virtual environment.

pip install rich

Add the code for printing markdown.

import sys

import os

import subprocess

from langchain.prompts import PromptTemplate

from langchain_openai import ChatOpenAI

from langchain_core.output_parsers import StrOutputParser

from rich.console import Console

from rich.markdown import Markdown

console = Console()

commit1 = sys.argv[1]

commit2 = sys.argv[2]

git_diff_command = f"git diff {commit1} {commit2}"

# Use the current working directory as the repository path

cwd = os.getcwd()

# Execute the git diff command and get the output

diff_output = subprocess.check_output(git_diff_command, shell=True, cwd=cwd).decode("utf-8")

prompt = """

You are an expert developer and git super user. You do code reviews based on the git diff output between two commits. Complete the following tasks, and be extremely critical and precise in your review:

* [Description] Describe the code change.

* [Obvious errors] Look for obvious errors in the code.

* [Improvements] Suggest improvements where relevant.

* [Friendly advice] Give some friendly advice or heads up where relevant.

* [Stop when done] Stop when you are done with the review.

This is the git diff output between two commits: \n\n {diff}

AI OUTPUT:

"""

llm = ChatOpenAI(

model="gpt-4o",

temperature=0,

max_tokens=None,

timeout=None,

max_retries=2,

)

output_parser = StrOutputParser()

PROMPT = PromptTemplate(template=prompt, input_variables=["diff"])

chain = PROMPT | llm | output_parser

print("Running diffs...")

results = chain.invoke({"diff": diff_output})

md = Markdown(results)

console.print(md)

This is how it looks to me:

Summary

This is my MVP automated code review setup. It can easily be extended to run once per changed file, to refactor the code, or to follow completely different instructions. Play around with it and let me know what you think. Thanks for reading!
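
As a starting point for the per-file idea, the diff output can be split on its "diff --git" header lines before each chunk is sent through the chain. This is a rough sketch with a made-up function name, and it assumes simple paths: file names containing spaces or quoted paths would need more careful parsing.

```python
import re

# Each file section of a git diff starts with a line like:
#   diff --git a/post-commit.py b/post-commit.py
_FILE_HEADER = re.compile(r"^diff --git a/(.+?) b/(.+)$")


def split_diff_by_file(diff_text: str) -> dict[str, str]:
    """Split git diff output into one chunk per file, keyed by the b/ path."""
    chunks: dict[str, list[str]] = {}
    current = None
    for line in diff_text.splitlines(keepends=True):
        match = _FILE_HEADER.match(line.rstrip("\r\n"))
        if match:
            # A new file section begins; start collecting its lines.
            current = match.group(2)
            chunks[current] = []
        if current is not None:
            chunks[current].append(line)
    return {path: "".join(lines) for path, lines in chunks.items()}
```

Each value can then be passed as the diff input of a separate chain.invoke call, giving one review per changed file.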
